Robots.txt

The robots.txt file lets you choose which pages of your online documentation portal search engines may index and show in their results. For example, if you use your ClickHelp portal to create context help, it makes sense to hide the topics with that content from search engines.

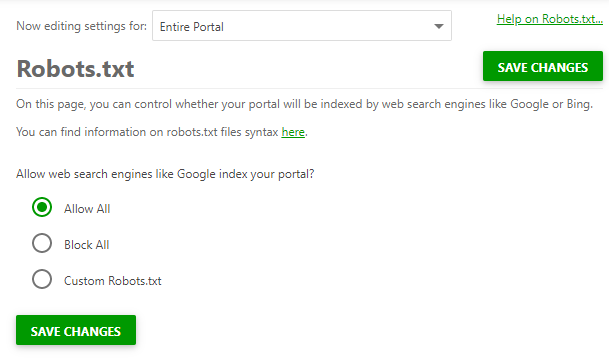

To set up robots.txt, go to Settings → Content Search → Robots.txt.

As the screenshot above shows, you can either allow search engines to index every page on your portal or forbid indexing of the whole portal.

The third option, Custom Robots.txt, lets you manually list the pages you do not want to be indexed. For example, you can use this option to hide specific help topics from search engines like this:

    User-agent: *
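To see how custom rules of this kind behave, you can check them locally before saving them in the portal. The sketch below uses Python's standard `urllib.robotparser` module. The `Disallow` paths and the `is_indexable` helper are hypothetical placeholders chosen for illustration, not actual ClickHelp URLs or settings.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: these paths are placeholders, not real ClickHelp URLs.
CUSTOM_ROBOTS_TXT = """\
User-agent: *
Disallow: /articles/context-help/
Disallow: /articles/user-guide/internal-notes
"""


def is_indexable(robots_txt: str, path: str, user_agent: str = "*") -> bool:
    """Return True if the given rules let user_agent crawl the path."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for path in (
        "/articles/context-help/topic-1",
        "/articles/user-guide/getting-started",
        "/articles/user-guide/internal-notes",
    ):
        status = "indexable" if is_indexable(CUSTOM_ROBOTS_TXT, path) else "hidden"
        print(f"{path}: {status}")
```

Each `Disallow` line is matched as a path prefix, so one rule ending in a slash hides every topic under that folder, while a rule with a full topic path hides only that topic.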

Learn more about the syntax of the robots.txt file in Robots.txt Introduction and Guide.