Online Documentation and SEO. Part 2 – Crawler-Friendly Pages

ClickHelp Team in SEO Lifehacks on 8/9/2013, 1 minute read

This is a continuation of a blog post series on the SEO aspect of technical writing. You can find the first post here: Part 1 – Human-Readable URLs.

Web crawlers used by search engines request web pages by URL. Most of these search spiders do not run any scripts when loading the pages.

It’s like browsing the web with scripts disabled in your browser. If a page relies heavily on scripts to render its content, web crawlers may get incomplete or incorrect content, and your SEO efforts will have very little effect on the end result.
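
To see roughly what such a crawler receives, the sketch below extracts page text from raw HTML without executing anything, skipping the bodies of <script> and <style> elements. It uses only Python’s standard library; the `crawler_view` helper and the two sample topics are hypothetical, and real crawlers do far more processing. The point it illustrates is narrow: text that only a script would insert never reaches a crawler that does not run scripts.

```python
from html.parser import HTMLParser


class ScriptlessTextExtractor(HTMLParser):
    """Collects text from raw HTML the way a crawler that runs no scripts
    would see it: <script> and <style> bodies are skipped, never executed."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def crawler_view(html: str) -> str:
    """Return the visible text present in the HTML before any script runs."""
    parser = ScriptlessTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical topic whose body is filled in by a script at load time.
SCRIPT_RENDERED = """
<html><body>
  <h1>Installing the Agent</h1>
  <div id="content"></div>
  <script>
    document.getElementById("content").textContent = "Run setup.exe as administrator.";
  </script>
</body></html>
"""

# The same topic with its body already present in the HTML.
STATIC = """
<html><body>
  <h1>Installing the Agent</h1>
  <div id="content"><p>Run setup.exe as administrator.</p></div>
</body></html>
"""

print(crawler_view(SCRIPT_RENDERED))  # Installing the Agent
print(crawler_view(STATIC))           # Installing the Agent Run setup.exe as administrator.
```

Only the static version exposes the instruction text; the script-rendered version gives the crawler nothing but the heading.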

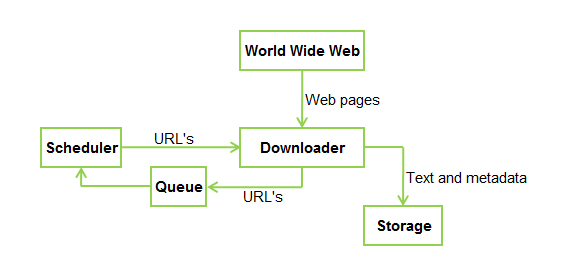

This is what the architecture of a standard Web crawler looks like:
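
The components of a typical crawler can be sketched in a few lines. This is a generic illustration under stated assumptions, not a reproduction of the diagram: a URL frontier (queue), a fetcher, a parser that extracts links, and a set of already-seen URLs, with fetched pages handed on for indexing. To keep it self-contained, a hypothetical in-memory site at `docs.example.com` stands in for real HTTP requests, and `crawl` and `LinkExtractor` are illustrative names.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> elements on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed: str, fetch: Callable[[str], Optional[str]], max_pages: int = 100) -> dict[str, str]:
    """Breadth-first crawl: URL frontier -> fetcher -> parser -> back to frontier.

    `fetch` maps a URL to its HTML, or None if the page is unavailable; a real
    crawler would issue HTTP requests here. The returned pages are what an
    indexer would then process.
    """
    frontier = deque([seed])    # URLs waiting to be fetched
    seen = {seed}               # every URL ever queued, so none is fetched twice
    store: dict[str, str] = {}  # raw HTML of fetched pages, keyed by URL

    while frontier and len(store) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        store[url] = html

        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            # Resolve relative links and drop #fragments, which point into the same page.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return store


# Hypothetical three-page help site served from memory instead of over HTTP.
SITE = {
    "https://docs.example.com/index.html": '<a href="install.html">Install</a> <a href="faq.html#top">FAQ</a>',
    "https://docs.example.com/install.html": '<a href="index.html">Home</a>',
    "https://docs.example.com/faq.html": "<p>No links here.</p>",
}

pages = crawl("https://docs.example.com/index.html", SITE.get)
print(sorted(pages))
```

Note that the parser only sees links written into the HTML; a link that a script would add at load time is never queued, which is one more reason to keep navigation crawler-friendly.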

This does not mean that you can’t use scripts in your online documentation. Scripts can add useful functions and visual effects to help topics. However, it makes sense to have a script-free version of those pages so that search engines can index their contents properly. In ClickHelp, we have paid close attention to this and made sure web crawlers receive the full topic contents. So, when you use ClickHelp to create online documentation, you don’t need to worry about web crawlers not running scripts.
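
For documentation served some other way, one common pattern is sketched below. It is an assumption for illustration, not ClickHelp’s implementation: the topic text always lives in the HTML itself, and the enhancement script is added only for browsers, chosen by the User-Agent header. The crawler token list, the `render_topic` helper, and the `/js/topic-enhancements.js` path are all hypothetical.

```python
from html import escape

# Substrings that identify some well-known search engine crawlers in the
# User-Agent header. Illustrative only, not an exhaustive list.
CRAWLER_TOKENS = ("googlebot", "bingbot", "yandexbot", "duckduckbot")

# Hypothetical script that adds interactive behavior for human readers.
ENHANCEMENT_SCRIPT = '<script src="/js/topic-enhancements.js"></script>'


def is_crawler(user_agent: str) -> bool:
    """True if the User-Agent contains one of the known crawler tokens."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in CRAWLER_TOKENS)


def render_topic(title: str, body_html: str, user_agent: str) -> str:
    """Build a topic page whose content lives in plain HTML.

    Crawlers get the script-free page; browsers get the same page plus the
    enhancement script, so the indexed text matches what readers see.
    """
    safe_title = escape(title)
    scripts = "" if is_crawler(user_agent) else ENHANCEMENT_SCRIPT
    return (
        f"<html><head><title>{safe_title}</title></head>"
        f"<body><h1>{safe_title}</h1>{body_html}{scripts}</body></html>"
    )


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
print(render_topic("Install", "<p>Run setup.exe.</p>", googlebot_ua))
print(render_topic("Install", "<p>Run setup.exe.</p>", browser_ua))
```

A simpler variant of the same idea is to send the enhanced page to everyone: since the text is already in the HTML, a crawler that ignores scripts still sees it. Whichever variant you choose, keep the text identical for crawlers and readers, because search engines generally treat showing crawlers different content from users as cloaking.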

Another important note: don’t forget about the meta tag named “robots”. Its value can significantly change how web crawlers behave. The same directives can also be addressed to a specific crawler by using that crawler’s name instead of “robots”. For example, <meta name="googlebot" content="noindex" /> prevents the page from being indexed by Google crawlers (read more at support.google.com).
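
The sketch below shows one way a crawler might read these tags. It assumes the crawler honors both the generic “robots” tag and a tag carrying its own name, and treats `noindex` (or `none`, shorthand for `noindex, nofollow`) as a refusal to index. `RobotsMetaParser` and `may_index` are hypothetical helpers; real crawlers apply further rules.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and from the meta tag
    named after one specific crawler, e.g. <meta name="googlebot">."""

    def __init__(self, crawler_name: str) -> None:
        super().__init__()
        self._names = {"robots", crawler_name.lower()}
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in self._names:
            # Directives are comma-separated and case-insensitive.
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def may_index(html: str, crawler_name: str) -> bool:
    """True unless a robots meta tag addressed to this crawler forbids indexing."""
    parser = RobotsMetaParser(crawler_name)
    parser.feed(html)
    parser.close()
    return not ({"noindex", "none"} & parser.directives)


page = '<html><head><meta name="googlebot" content="noindex" /></head><body>Draft topic</body></html>'
print(may_index(page, "googlebot"))  # False
print(may_index(page, "bingbot"))    # True
```

Because the tag in the example names only Google’s crawler, the page stays indexable for other crawlers; use name="robots" when a page should be hidden from all of them.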

One more important point concerns the robots.txt file, but we will cover it in one of our next blog posts. Stay tuned!

Happy Technical Writing!

ClickHelp Team